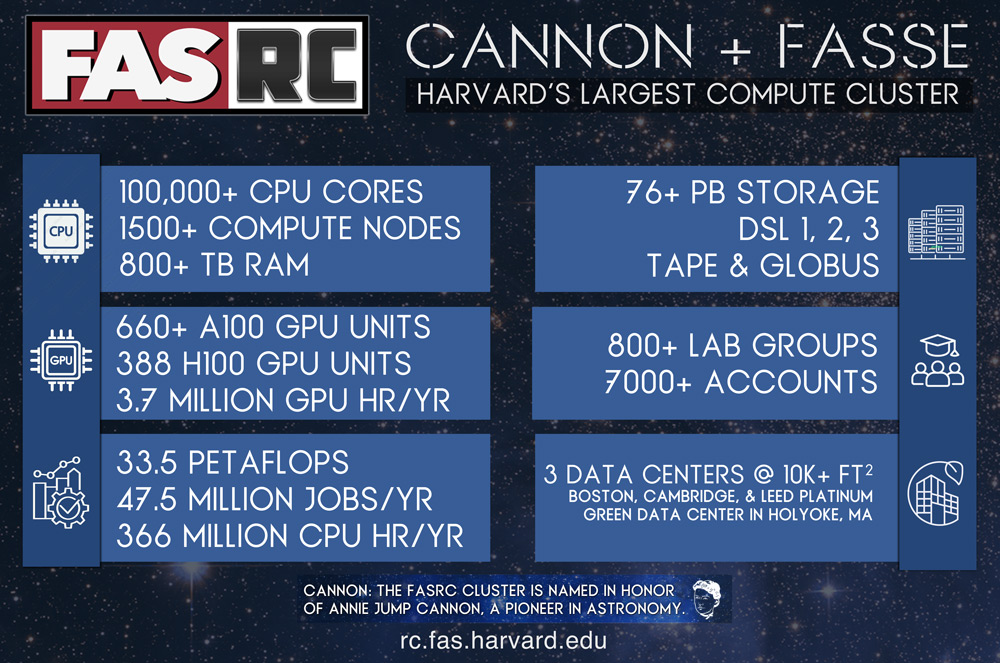

The FASRC Cannon compute cluster is a large-scale HPC (high performance computing) cluster supporting scientific modeling and simulation for thousands of Harvard researchers. Assembled with the support of the Faculty of Arts and Sciences, but since branching out to serve many Harvard units, it occupies more than 10,000 square feet with hundreds of racks spanning three data centers separated by 100 miles. The primary compute is housed in MGHPCC, our green (LEED Platinum) data center in Holyoke, MA. Other systems, including storage, login, virtual machines, and specialty compute, are housed in our Boston and Cambridge facilities.

CPU Compute: The core of the Cannon cluster ("Cannon actual") is comprised of Lenovo SD650 NeXtScale servers, part of their new liquid-cooled Neptune line. Each chassis unit contains two nodes, each containing two Intel "Cascade Lake" or Sapphire Lake" processors. The nodes are interconnected by HDR and NDR fabric in a single Fat Tree with a 400 Gbps IB core. The liquid cooling allows for efficient heat extraction while running higher clock speeds. Each Cannon node is several times faster than any previous Odyssey cluster node.

While the total number of nodes has been reduced compared to the previous cluster, the power of those nodes has increased dramatically.

GPU Compute: Our GPU footprint has grown over recent years to the number you see above. This total incorporates GPUs from the Kempner Institute and SEAS partitions which are available to all when not in use. All GPU nodes are connected via 100 Gbps InfiniBand.

Storage: FASRC now maintains over 60 PB of storage, and this keeps growing. Robust home directories are housed on enterprise-grade Isilon storage, while faster Lustre filesystems serve more performance-driven needs such as scratch and research shares. Our middle tier laboratory storage uses a mix of Lustre, CEPH, and NFS filesystems. See our Data Storage page for more details.

Interconnect: Cannon has two underlying networks: A traditional TCP/IP network and low-latency InfiniBand networks that enable high-throughput messaging for inter-node parallel-computing and fast access to Lustre mounted storage. The IP network topology connects the three data centers together and presents them as a single contiguous environment to FASRC users.

Software: Our core operating system is Rocky Linux. We maintain the configuration of the cluster and all related machines and services via Puppet. Cluster job scheduling is provided by SLURM (Simple Linux Utility for Resource Management) across several shared partitions, processing approximately 45,600,000 jobs per year. See our documentation on running jobs.

As well as supporting cluster modules, we manage over a thousand different scientific software tools and programs. The FASRC user portal is a great place to find out more information on software modules, check on job status, and submit a help request. A license manager service is maintained for software requiring license checkout at run-time. Other services to the community include the distribution of individual use software packages and Citrix and Virtual OnDemand (VDI) instances that allow for remote display and execution of various software packages.

Internal Infrastructure: FASRC manages numerous machines which handle our internal infrastructure for authentication, account creation and management, documentation, help tickets, administration machines, out-of-band management, inventory, monitoring, statistics, and billing. FASRC supports over 7500 users in more than 800 labs.