We have some exciting news to share with you about new resources. In the summer of 2019 we invested in direct water cooling, which allowed us to bring you the Cannon cluster with 32,000 of the fastest Intel Cascade Lake cores. This 8-rack block of compute has formed the core of our computational capacity for the past 2 years, and faculty purchases have since expanded it to its full 12-rack capacity. Additional direct water cooling capacity is being built out for January 2023. At the same time, we purchased 16 air-cooled GPU nodes, each with 4x NVIDIA V100s. These nodes were FASRC’s first major foray into a public block of GPUs.

As part of that install, though, we knew we were neglecting some significant use cases on the cluster, namely jobs requiring more than 192 GB of RAM. This was borne out by the Cluster User Survey we ran earlier this year, in which the most popular request was for new high memory nodes. We had promised to rectify this with a new install at a later date. That later date is now.

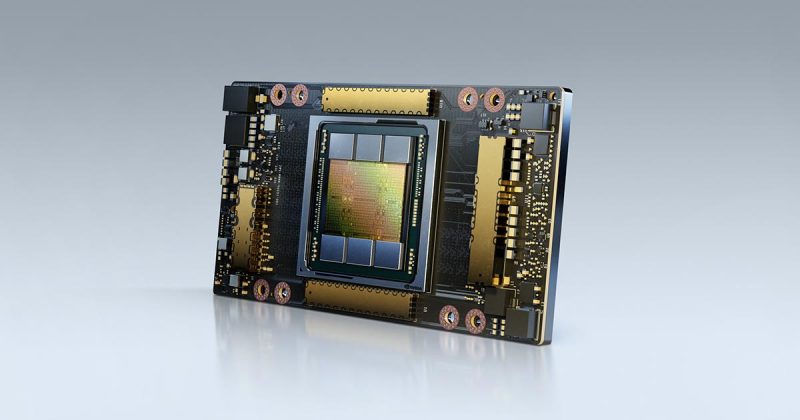

We are pleased to present two new blocks of compute that will significantly augment Cannon’s research capabilities. Both were installed during the annual MGHPCC outage in mid-August. The first is a new block of 18 water-cooled GPU nodes, each with 4x NVIDIA A100 SXM4 cards. These new cards are blazing fast and mark a substantial upgrade to our GPU footprint. The second is a new block of 36 water-cooled “big mem” CPU nodes (6 of which are dedicated to the FASSE cluster), each with 2x Intel 8358 processors (64 cores total) and 512 GB of RAM. These will allow us to replace our old AMD high memory nodes, which have been our workhorse for over 8 years. We also plan to purchase ultra high memory nodes (1 TB+ of RAM) in the future, so look for those down the line.
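For a sense of scale, the per-node figures above multiply out as shown in this back-of-the-envelope sketch. It uses only the numbers quoted in this post and assumes every node in each block is configured identically; the constant and function names are illustrative.

```python
# Aggregate capacity of the two new blocks, derived only from the per-node
# figures quoted above (assumes every node in a block is identical).
GPU_NODES = 18
A100_PER_NODE = 4

BIGMEM_NODES = 36
BIGMEM_FASSE_NODES = 6        # nodes set aside for the FASSE cluster
CORES_PER_BIGMEM_NODE = 64    # 2 x Intel 8358 processors
RAM_GB_PER_BIGMEM_NODE = 512


def summarize() -> dict:
    """Return totals for the new GPU and big mem blocks."""
    cannon_bigmem_nodes = BIGMEM_NODES - BIGMEM_FASSE_NODES
    return {
        "a100_gpus": GPU_NODES * A100_PER_NODE,
        "bigmem_cores_total": BIGMEM_NODES * CORES_PER_BIGMEM_NODE,
        "bigmem_cores_cannon": cannon_bigmem_nodes * CORES_PER_BIGMEM_NODE,
        "bigmem_ram_gb_total": BIGMEM_NODES * RAM_GB_PER_BIGMEM_NODE,
    }


if __name__ == "__main__":
    for name, value in summarize().items():
        print(f"{name}: {value}")
```

Running it reports 72 A100 GPUs, 2,304 big mem cores in total (1,920 of them on Cannon after the 6 FASSE nodes are set aside), and 18,432 GB of RAM across the big mem block.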

This new hardware will increase our computational power by roughly 25%. As a result, we have recalculated the base gratis fairshare for all groups, which will increase from 100 to 120. This new base fairshare score will be applied to all groups when the new hardware goes live.

Nvidia A100

Speaking of which, the cutover will occur on Thursday, September 30th. Starting that day, expect the following changes:

- The gpu partition will be cut over to the new A100 hardware. Existing jobs in that partition will move over to the new hardware seamlessly. If you have jobs built specifically to run only on the V100 hardware, please rearchitect them for the A100. You will need a recent version of CUDA (11.0 or later) to use the new GPUs.

- The bigmem partition will be cut over to the new Ice Lake hardware. Existing jobs in that partition will move over to the new hardware seamlessly. Be advised that we will be instituting a 7-day maximum runtime on the bigmem partition. The new hardware is an order of magnitude faster, so the vast majority of jobs should fit within that limit.

- The gpu_test partition will be expanded using the V100s from the current gpu partition. We will increase the maximum number of jobs allowed, as well as the maximum amount of memory, cores, and GPUs permitted, to more closely match what the test partition permits. This will provide a partition for fast turnaround of shorter GPU jobs (< 8 hours) and make gpu_test much more useful than it is now.

- All FASRC-owned partitions running K80 or older GPU hardware will be decommissioned. This includes fas_gpu and remoteviz. Users of these partitions should submit to the upgraded gpu partition.

- Owners of private partitions with GPU hardware of K80 generation or older should expect us to contact them to schedule decommissioning by the end of FY22. We will need this space for upcoming installations. In addition, this 6+ year old hardware is not power- or cooling-efficient relative to the processing power it provides.

- huce_amd_bigmem and the old AMD-based bigmem nodes will be decommissioned, as they are out of warranty. Users are encouraged to use the newer, 10x faster bigmem nodes.

- Gratis fairshare will be set to 120 for all labs on the cluster.
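Because the new bigmem cap (7 days) and the intended gpu_test window (under 8 hours) both depend on requested walltime, it can help to sanity-check a job's `--time` value before submitting. The sketch below is a hypothetical helper, not a FASRC tool: `PARTITION_LIMITS`, `parse_slurm_time`, and `fits_partition` are illustrative names, and treating 8 hours as a hard gpu_test limit is an assumption. It parses the `--time` formats documented for Slurm's sbatch; the scheduler itself remains the authority on each partition's actual limits.

```python
from datetime import timedelta

# Hypothetical per-partition walltime caps. The bigmem cap comes from this
# announcement; treating 8 hours as a hard gpu_test cap is an assumption.
PARTITION_LIMITS = {
    "bigmem": timedelta(days=7),
    "gpu_test": timedelta(hours=8),
}


def parse_slurm_time(spec: str) -> timedelta:
    """Convert a Slurm --time string to a timedelta.

    Handles "minutes", "minutes:seconds", "hours:minutes:seconds",
    "days-hours", "days-hours:minutes" and "days-hours:minutes:seconds".
    """
    days_str, sep, rest = spec.partition("-")
    if not sep:  # no day component present
        days_str, rest = "0", spec
    fields = rest.split(":")
    if not days_str.isdigit() or not all(f.isdigit() for f in fields):
        raise ValueError(f"unrecognized time spec: {spec!r}")
    nums = [int(f) for f in fields]
    if len(nums) > 3:
        raise ValueError(f"unrecognized time spec: {spec!r}")

    if sep:
        # With a day prefix, the fields are hours[:minutes[:seconds]].
        hours, minutes, seconds = (nums + [0, 0])[:3]
    elif len(nums) == 1:
        hours, minutes, seconds = 0, nums[0], 0
    elif len(nums) == 2:
        hours, minutes, seconds = 0, nums[0], nums[1]
    else:
        hours, minutes, seconds = nums
    return timedelta(days=int(days_str), hours=hours,
                     minutes=minutes, seconds=seconds)


def fits_partition(partition: str, time_spec: str) -> bool:
    """Return True if the requested walltime is within the partition's cap.

    Partitions missing from PARTITION_LIMITS are treated as uncapped here,
    which is a simplification, not actual cluster policy.
    """
    limit = PARTITION_LIMITS.get(partition)
    if limit is None:
        return True
    return parse_slurm_time(time_spec) <= limit


if __name__ == "__main__":
    print(fits_partition("bigmem", "7-00:00:00"))  # True
    print(fits_partition("bigmem", "8-00"))        # False
    print(fits_partition("gpu_test", "12:00:00"))  # False
    print(fits_partition("gpu_test", "480"))       # True: 480 minutes = 8 hours
```

A bare number is read as minutes, matching sbatch's convention, which is why `"480"` lands exactly on the assumed 8-hour gpu_test cap.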

If you want testing access to these new nodes, please send in a ticket to rchelp@rc.fas.harvard.edu.

Those are all the changes to expect on September 30th. None of them requires cluster downtime or interrupts user workflows or jobs. Jobs already running on the older nodes will finish there, but those queues will be closed to new jobs, and the servers will be decommissioned afterwards. We are very excited about the new hardware: we’ve increased the total compute capacity of Cannon by 1.7 petaflops and solved an outstanding issue with our high memory nodes.

As always, if you have any questions, comments, or concerns, do not hesitate to contact us.

FAS Research Computing