If you have tuned in to tech talk over the last couple of years, you have heard certain buzzwords making their way into the common vernacular, big data being one of them. But as with any buzzword, the meaning behind it can be murky, as can the public's understanding of that meaning. In 2012, Citrix, the software giant, commissioned a survey to determine just how much the American public understood about another favorite tech buzzword: the cloud. The results were illuminating. When asked what "the cloud" is, a majority of respondents thought it was an actual cloud or something related to the weather and sky. Fifty-four percent claimed never to have used cloud services, even though 95% of them actually had, through online banking, shopping, social networking, or media. And 1 in 5 respondents reported pretending to know what the cloud was or how it worked when it came up in conversation.

Before we get to the size of big data, let's first define it. Big data, as defined by McKinsey & Company, refers to "datasets whose size is beyond the ability of typical database software tools to capture, store, manage, and analyze." The definition is fluid. It does not set minimum or maximum byte thresholds because it assumes that as time and technology advance, so too will the size and number of datasets. Big data is also not tied to one specific industry or sector. Data is collected and created everywhere. From "liking" something on Facebook or posting a photo, to traffic sensors placed across roadways, to online shopping logged by retailers, to scientific measurements, to ATMs, to video uploads, data is in a continual state of creation, and it is becoming easier and cheaper to store it all.

So how big is big data? In 2012, IDC and EMC placed the total of "all the digital data created, replicated, and consumed in a single year" at 2,837 exabytes, or about 2.8 trillion gigabytes. Forecasts between now and 2020 have data doubling every two years, meaning that by 2020 big data may total 40,000 exabytes, or 40 trillion gigabytes. But how much of that data will prove useful? IDC and EMC estimate that about a third of the data will hold valuable insights if analyzed correctly. Currently, only 23% of collected data has been deemed beneficial, and of that only 3% is tagged and only 0.5% has been analyzed. But if the data can be used, McKinsey estimates retailers could increase operating margin by 60% and national U.S. healthcare expenditures could be reduced by 8% per year.
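The arithmetic behind these figures is easy to check. The sketch below is only an illustration, not anything published by IDC, EMC, or McKinsey: the function names are made up for this example, and it assumes decimal units (1 exabyte = 10^9 gigabytes) and a clean doubling every two years starting from the 2012 figure. Under binary units (2^30 gigabytes per exabyte) the same 2,837 exabytes comes out slightly above 3 trillion gigabytes, which is why rounded figures for it can differ.

```python
# Illustrative constants; which unit convention a report uses is an assumption here.
GB_PER_EB_DECIMAL = 10**9   # SI convention: 1 EB = 10^9 GB
GB_PER_EB_BINARY = 2**30    # binary convention: 1 EiB = 2^30 GiB


def exabytes_to_gigabytes(exabytes, gb_per_eb=GB_PER_EB_DECIMAL):
    """Convert exabytes to gigabytes under the chosen unit convention."""
    return exabytes * gb_per_eb


def project_doubling(start_eb, start_year, end_year, doubling_years=2):
    """Project a volume forward, assuming it doubles every `doubling_years`."""
    periods = (end_year - start_year) / doubling_years
    return start_eb * 2 ** periods


baseline_eb = 2837  # the 2012 figure quoted in the text

decimal_gb = exabytes_to_gigabytes(baseline_eb)
binary_gb = exabytes_to_gigabytes(baseline_eb, GB_PER_EB_BINARY)
projected_2020_eb = project_doubling(baseline_eb, 2012, 2020)

print(f"2012, decimal units: {decimal_gb / 1e12:.2f} trillion GB")
print(f"2012, binary units:  {binary_gb / 1e12:.2f} trillion GB")
print(f"2020 projection:     {projected_2020_eb:,.0f} EB")
```

Four doublings between 2012 and 2020 multiply the starting figure by 16, giving roughly 45,000 exabytes. That is the same order of magnitude as the 40,000 exabytes forecast, which suggests "doubling every two years" is a rounded description of the projected growth rather than an exact rule.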

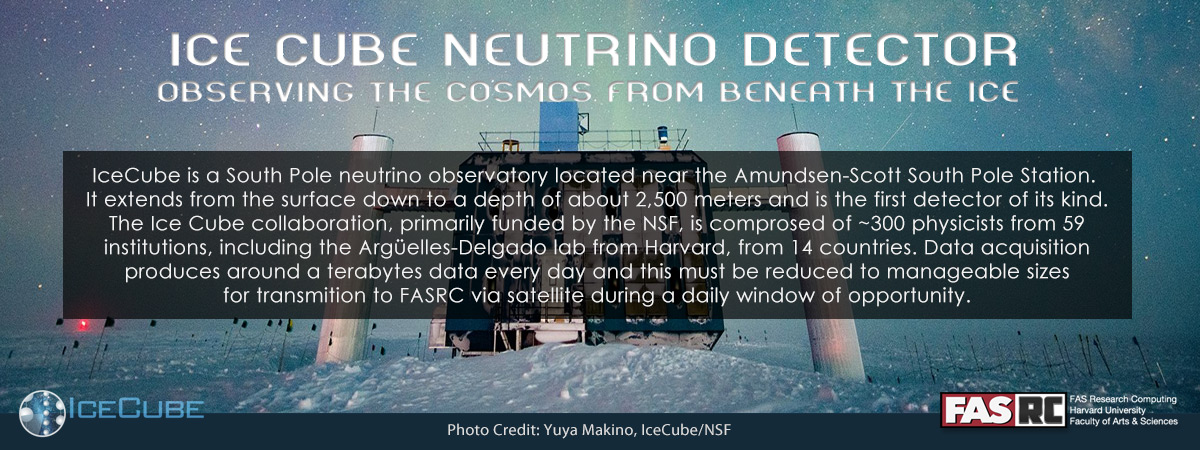

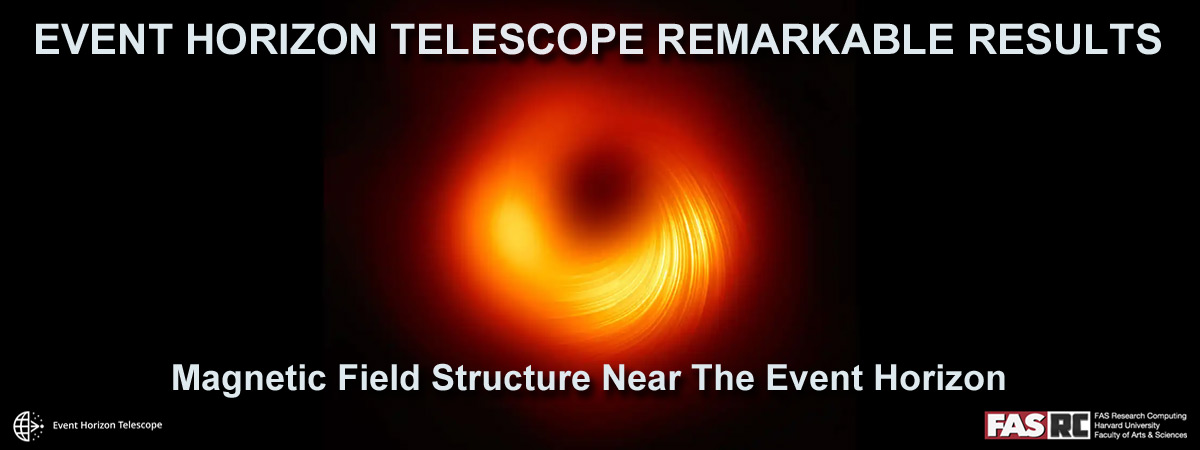

One of the major challenges of big data is how to extract value from it. We know how to create it and store it, but we fall short when it comes to analysis and synthesis. Projections show the U.S. is facing a shortage of 1.5 million managers and analysts who can analyze big data and make decisions based on their findings. How to fill the big data skills gap is a major question that leaders of companies and countries will need to answer in the coming years. But once the skills gap is addressed, big data is poised to have profound effects on healthcare, public administration, retail, manufacturing, and mobile applications. Scientific research will also see dramatic impacts as data-intensive fields such as bioinformatics, climate science, and physics use computation more effectively to interpret observations and datasets.

We might not have all the answers to the challenges big data presents for our wired world, but as big data becomes a more central component of business success and scientific innovation, solutions will be sought and value will be created. We just need to look at the data.